Reliable IaC and CI/CD pipeline with Terraform and Cloud Build on GCP

There are many tutorials and solutions about how to set up infrastructure as code on GCP, and just as many for CI/CD pipelines, but I have not found one that explains how the 2 interconnect and how the output of the infrastructure setup can be used by the CI/CD pipeline.

TL;DR

You can find the seed project and the documentation at github.com/gabihodoroaga/infrastructure-cicd.

The solution

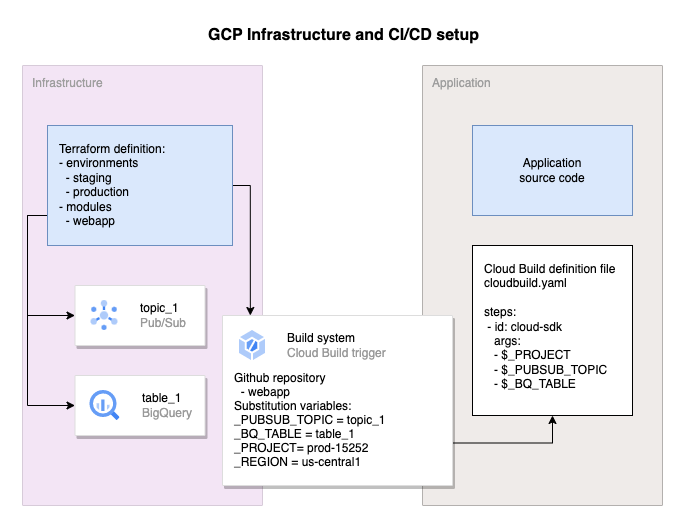

This solution splits all the resources into 2 zones:

- The infrastructure zone, which is responsible for managing all the resources an application needs to run, such as Pub/Sub topics, BigQuery tables and Cloud SQL instances, as well as the build system for the application (the Cloud Build trigger).

- The application development zone, which is responsible for managing the application source code and the deployment of the application to production (in our case, the Cloud Build definition file).

The 2 zones mentioned above are materialized into 2 or more repositories.

This solution follows 5 principles in addition to standard IaC and CI/CD practices:

- Flexibility is the first principle. In our case it refers to the ability to choose which zone (infrastructure or development) is responsible for maintaining a resource.

- To deploy a new version of an application, the infrastructure repository should not be modified. This avoids the unnecessary extra step of updating the infrastructure repository after the application repository has been updated.

- If a rollback of an application version is required, it should be done by triggering a new deployment from a previous commit of the application repository, and the infrastructure repository should not be modified.

- The application deployment pipeline must allow pre- and post-deployment steps (e.g. database updates and schema migrations).

- The output of the infrastructure setup (for example, a BigQuery table name or a Cloud SQL instance name) must be available to the application at build time.

The design overview

The infrastructure project setup

- environments
  - production
    - backend.tf
    - main.tf
  - staging
    - main.tf
    - backend.tf
- modules
  - webapp
    - main.tf
    - output.tf
    - variable.tf
    - versions.tf

In this project everything is a module. More specifically, every resource that an application needs in order to run must be encapsulated in a module.

Additionally, we have 2 environments: staging and production. Each environment has its own folder, its own project and its own bucket for the Terraform state.

In the main.tf of each environment we add the modules and provide the variables they need.

Keeping every resource definition in modules means that staging and production are built from the same code. Infrastructure configuration changes can be tested in staging before going to production, so we can be confident that what is pushed to the production environment will just work.
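
As a rough sketch of what previewing a change for one environment could look like from a shell, the helper below runs init and plan against a single environment folder. It assumes Terraform 0.14 or newer (for the -chdir option) and the folder layout shown above. The function name plan_env is only illustrative, and in this setup the actual runs are started by the Cloud Build triggers created later, not by hand.

```shell
# Hypothetical helper: preview the changes for one environment folder.
# Usage: plan_env staging   or   plan_env production
plan_env() {
  env_dir="environments/$1"
  # -chdir makes Terraform behave as if it was started inside the
  # environment folder, so each environment picks up its own backend.tf.
  terraform -chdir="$env_dir" init -input=false &&
    terraform -chdir="$env_dir" plan -input=false
}
```

Running plan_env staging and then plan_env production against the same commit is a quick way to confirm that both environments are driven by the same module code.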

We will also use 2 branches:

- main - this branch is deployed to the staging environment

- production - this branch is deployed to the production environment

It is recommended to mark the production branch as protected so that it only accepts updates through pull requests.

The same 2-branch model is used for the application repositories.

The best way to explain this design is to walk you through the example step by step.

The setup

Set up your variables

PROJECT_STG=blog-infra-staging-1

PROJECT_PROD=blog-infra-production-1

Create the 2 projects

gcloud projects create $PROJECT_STG --name="Staging"

gcloud projects create $PROJECT_PROD --name="Production"

Create the 2 buckets used to store the Terraform state

gcloud storage buckets create "gs://$PROJECT_STG-tfs"

gcloud storage buckets create "gs://$PROJECT_PROD-tfs"

Fork the 2 repositories used in this example: infrastructure-cicd and pubsub-to-bq.

Patch the infrastructure-cicd project to match your environment.

These are the files and properties that need to be updated:

- environments/production/backend.tf - update the bucket property to match your production bucket name

- environments/production/main.tf - update the project property to match your production project name

- environments/staging/backend.tf - update the bucket property to match your staging bucket name

- environments/staging/main.tf - update the project property to match your staging project name

Push your changes.

Log in to the GCP Console and enable billing for the projects. Check the official documentation at https://cloud.google.com/billing/docs/how-to/modify-project if you need to.

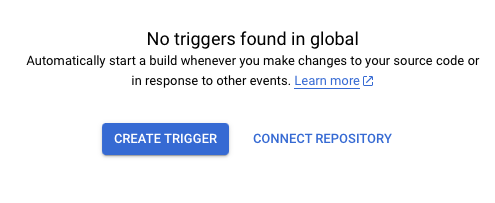

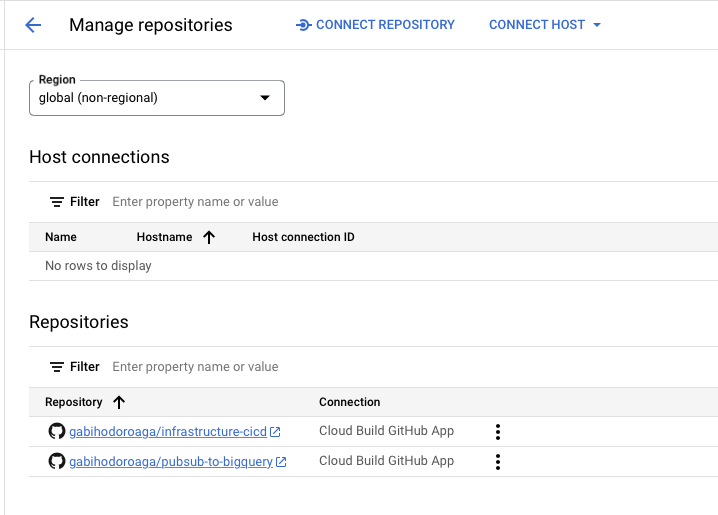
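
Billing can also be linked from the command line instead of the console. This is a minimal sketch: the helper name link_billing and the BILLING_ACCOUNT variable are placeholders that are not part of the original setup, and you need permission to use the billing account.

```shell
# Hypothetical helper: attach a billing account to one project.
# $1 = project ID, $2 = billing account ID (format XXXXXX-XXXXXX-XXXXXX).
link_billing() {
  gcloud billing projects link "$1" --billing-account="$2"
}

# Example (BILLING_ACCOUNT is a placeholder you must set yourself;
# `gcloud billing accounts list` shows the IDs you have access to):
# link_billing "$PROJECT_STG" "$BILLING_ACCOUNT"
# link_billing "$PROJECT_PROD" "$BILLING_ACCOUNT"
```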

Navigate to the Cloud Build section, enable the API and connect the 2 repositories. You have to do this for both projects: staging and production.

Cloud Build -> Triggers -> Connect Repository

Do not use the “repositories (2nd gen)” option because it is still in preview and I don’t know how it works.

If you did this correctly, you should find the 2 repositories under “Manage repositories”, as in the picture below
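
The API part of this step can also be done from a shell, while connecting the repositories still goes through the console as described above. A small sketch, with enable_cloud_build_api as an illustrative name:

```shell
# Hypothetical helper: enable the Cloud Build API in every project
# passed as an argument, stopping at the first failure.
enable_cloud_build_api() {
  for project in "$@"; do
    gcloud services enable cloudbuild.googleapis.com --project "$project" || return 1
  done
}

# Example:
# enable_cloud_build_api "$PROJECT_STG" "$PROJECT_PROD"
```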

Next we need to create the build trigger for the infrastructure repository in each of the projects.

Start with staging, but first set your repo owner name

REPO_OWNER=[your_github_user]

gcloud beta builds triggers create github \

--region=global \

--repo-name=infrastructure-cicd \

--repo-owner=$REPO_OWNER \

--branch-pattern='^main$' \

--build-config=cloudbuild-staging.yaml \

--project $PROJECT_STG

and then production

gcloud beta builds triggers create github \

--region=global \

--repo-name=infrastructure-cicd \

--repo-owner=$REPO_OWNER \

--branch-pattern='^production$' \

--build-config=cloudbuild-production.yaml \

--require-approval \

--project $PROJECT_PROD

You can update the trigger names and description from the GCP Console.

Before triggering an update to our repo we need to adjust the Cloud Build service account permissions.

For Staging:

PROJECT_NUMBER=$(gcloud projects describe "$PROJECT_STG" --format="value(projectNumber)")

CB_SERVICE_ACCOUNT="$PROJECT_NUMBER@cloudbuild.gserviceaccount.com"

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/editor"

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/iam.serviceAccountUser"

# if you need to deploy a cloud run service

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/run.admin"

# if you need to create a service account

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/iam.serviceAccountAdmin"

# if you need to create secrets

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/secretmanager.admin"

# if you need to access secrets

gcloud projects add-iam-policy-binding $PROJECT_STG \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/secretmanager.secretAccessor"

For Production:

PROJECT_NUMBER=$(gcloud projects describe "$PROJECT_PROD" --format="value(projectNumber)")

CB_SERVICE_ACCOUNT="$PROJECT_NUMBER@cloudbuild.gserviceaccount.com"

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/editor"

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/iam.serviceAccountUser"

# if you need to deploy a cloud run service

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/run.admin"

# if you need to create a service account

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/iam.serviceAccountAdmin"

# if you need to create secrets

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/secretmanager.admin"

# if you need to access secrets

gcloud projects add-iam-policy-binding $PROJECT_PROD \

--member="serviceAccount:$CB_SERVICE_ACCOUNT" \

--condition=None \

--role="roles/secretmanager.secretAccessor"

Or, you can add the owner role if you are too lazy to run all these commands, but I do not recommend this.
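
Since the staging and production commands differ only in the project and the role, they can be collapsed into a loop. The function below is a sketch of that idea, not part of the seed project: the name grant_cloud_build_roles is made up, and it assumes the default Cloud Build service account used above.

```shell
# Hypothetical helper: grant a list of roles to the default Cloud Build
# service account of a project.
# Usage: grant_cloud_build_roles PROJECT_ID ROLE [ROLE ...]
grant_cloud_build_roles() {
  project="$1"
  shift
  # Look up the project number for exactly this project ID.
  project_number="$(gcloud projects describe "$project" --format="value(projectNumber)")" || return 1
  member="serviceAccount:${project_number}@cloudbuild.gserviceaccount.com"
  for role in "$@"; do
    gcloud projects add-iam-policy-binding "$project" \
      --member="$member" \
      --condition=None \
      --role="$role" || return 1
  done
}

# Example: the 2 base roles from this post, plus Cloud Run.
# grant_cloud_build_roles "$PROJECT_STG" roles/editor roles/iam.serviceAccountUser roles/run.admin
```

Passing the roles as arguments keeps the optional ones (Cloud Run, service accounts, secrets) explicit per project, so each project only gets the roles it actually needs.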

Next let’s update the infra project and push the changes to main and see what happens.

You need to update the GitHub repo owner for the pubsub-to-bq service anyway. Set

modules/pubsub-to-bq/main.tf -> google_cloudbuild_trigger -> pubsub_to_bq -> github -> owner

to your GitHub username.

Push the changes to the main branch.

If everything works well, you should see all the staging resources created, as well as the Cloud Build trigger for the pubsub-to-bq service.
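
To check what the infra project passed into that trigger, you can print the substitution variables defined on it. This is a sketch: the helper name is made up, and the actual trigger name depends on what the Terraform module assigns, so look it up first.

```shell
# Hypothetical helper: print the substitutions defined on a trigger.
# $1 = trigger name or ID, $2 = project ID.
show_trigger_substitutions() {
  gcloud builds triggers describe "$1" \
    --project "$2" \
    --format="yaml(substitutions)"
}

# Find the trigger name first, then inspect it:
# gcloud builds triggers list --project "$PROJECT_STG" --format="value(name)"
# show_trigger_substitutions TRIGGER_NAME "$PROJECT_STG"
```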

Now update and push changes to the main branch of the pubsub-to-bq repository.

You should see your service deployed to Cloud Run.

gcloud run services list --project $PROJECT_STG

Now let’s move everything to production.

For the infrastructure-cicd project, create and push a new branch called production

git checkout -b production

git push -u origin production

Manually approve the build, and you should then see your resources created in the production project.

Do the same for the pubsub-to-bq repository

git checkout -b production

git push -u origin production

Don’t forget to manually approve the build.

From this point forward the recommended way of pushing updates to production is by using a pull request.

Done. You are now ready to work on your application development.

Next comes the very boring part, where we walk through the 5 principles mentioned at the beginning.

- Flexibility - The developer has the option to put the runtime and the build variables in the application repo, in the cloudbuild.yaml file or in the infra repo. Whatever works best.

- New version - For a new version of pubsub-to-bq, all you have to do is update the service repo. The infra will not be touched.

- Rollback version - For a service rollback you can easily trigger a previous build.

- Complex deployment steps - Because the deployment steps are declared inside the application repository, and because Cloud Build allows any Docker image to be used as a step, you can configure any number of pre- and post-deployment steps.

- Build time variables - Because the infra project is responsible for providing the build system (the Cloud Build trigger), it is easy to push the output of the infra project into the build system of the application using substitution variables.
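
For the rollback case, one way to trigger a deployment from a previous commit is to run the existing trigger against that commit's SHA. The sketch below assumes you know the trigger name and the commit you want. The helper name rollback_to_commit is illustrative only, and a trigger that requires approval will still wait for it.

```shell
# Hypothetical helper: re-run a trigger against a specific commit.
# $1 = trigger name or ID, $2 = commit SHA to deploy, $3 = project ID.
rollback_to_commit() {
  gcloud builds triggers run "$1" --sha="$2" --project "$3"
}

# Example (TRIGGER_NAME and PREVIOUS_COMMIT_SHA are placeholders):
# rollback_to_commit TRIGGER_NAME PREVIOUS_COMMIT_SHA "$PROJECT_PROD"
```

Because the build definition lives in the application repository, the old commit is built with the deployment steps it had at that time, and the infrastructure repository stays untouched.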

This setup works very well when the developers are the ones responsible for running the services in production and there is close collaboration between the DevOps team and the developers. It might not be the best solution in an environment where the DevOps team takes care of deploying and running the services and where every aspect of a deployment must be approved by the DevSecOps team.

Conclusion

The word “reliable” in the title of this post comes from the fact that I’m using this setup in a real production environment with 30 different resource types and 20+ services, and it just works. I have spent almost no time fixing platform problems. I call this reliable.