Ingress-nginx on GKE

The first choice for ingress on GKE is kubernetes/ingress-gce, which automatically deploys an external HTTPS load balancer on GCP. However, there are situations where ingress-nginx must be used, especially when migrating workloads from other providers to GCP. By default, ingress-nginx is deployed as a service of type “LoadBalancer”, which on GCP creates a TCP load balancer. Although this is good enough for most workloads, it comes with some major limitations: you cannot use backend options such as Cloud Armor and Cloud CDN, or advanced load balancer capabilities such as weighted backend services.

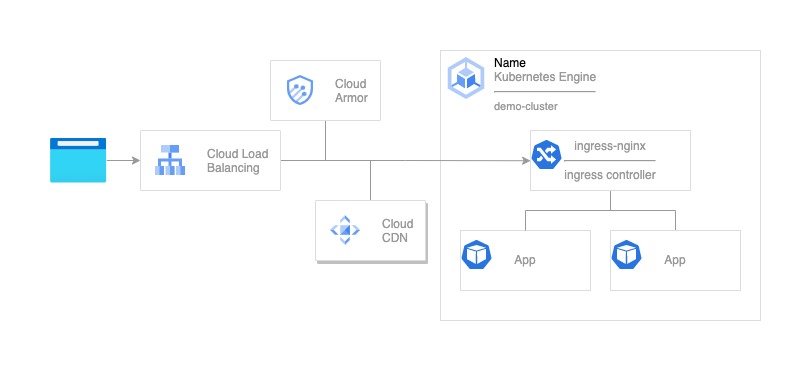

To get the best of both worlds on GKE, you can deploy ingress-nginx together with a custom external load balancer.

TL;DR

You can find the full setup script here.

The solution overview

The setup

First let’s define the variables.

PROJECT_ID=$(gcloud config list project --format='value(core.project)')

ZONE=us-central1-a

CLUSTER_NAME=demo-cluster

Then create the GKE cluster.

gcloud container clusters create $CLUSTER_NAME \
  --zone $ZONE --machine-type "e2-medium" \
  --enable-ip-alias \
  --num-nodes=2

The --enable-ip-alias flag enables VPC-native traffic routing for your cluster. With this option, the pods get IP addresses allocated from secondary (alias) ranges of the VPC subnet, so the load balancer can address the pods directly. This is known as container-native load balancing.
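If you want to confirm that the cluster really is VPC-native before going further, a check along these lines may help. This is only a sketch: the function name is_vpc_native is made up for this example, and it assumes the cluster description exposes the ipAllocationPolicy.useIpAliases field.

```shell
# Hypothetical helper (not part of the original setup script):
# succeeds when the cluster reports VPC-native (alias IP) mode.
is_vpc_native() {
  local cluster="$1"
  local zone="$2"
  local value
  value=$(gcloud container clusters describe "$cluster" --zone "$zone" \
    --format='value(ipAllocationPolicy.useIpAliases)')
  # gcloud prints booleans as True/False, so compare case-insensitively.
  [ "$(printf '%s' "$value" | tr '[:upper:]' '[:lower:]')" = "true" ]
}

# Usage: is_vpc_native "$CLUSTER_NAME" "$ZONE" && echo "cluster is VPC-native"
```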

We will use Helm to deploy ingress-nginx. Helm is installed by default in Cloud Shell.

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

By default, the ingress-nginx controller service is of type LoadBalancer, which automatically creates a TCP load balancer for you. That is not the behavior we want here, so we need to adjust the Helm configuration.

Create a file values.yaml.

cat << EOF > values.yaml
controller:
  service:
    type: ClusterIP
    annotations:
      cloud.google.com/neg: '{"exposed_ports": {"80":{"name": "ingress-nginx-80-neg"}}}'
EOF

Install the ingress-nginx.

helm install -f values.yaml ingress-nginx ingress-nginx/ingress-nginx
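Once the chart is installed, GKE should create the NEG named in the annotation. The sketch below shows one way to check that. The helper names are hypothetical, and it assumes the release is called ingress-nginx in the current namespace, so that the chart names the service ingress-nginx-controller.

```shell
# Hypothetical helper: prints the NEG status annotation that GKE writes on the
# service. Assumes the default service name for a release called "ingress-nginx".
neg_status() {
  local svc="${1:-ingress-nginx-controller}"
  kubectl get service "$svc" \
    -o jsonpath='{.metadata.annotations.cloud\.google\.com/neg-status}'
}

# Hypothetical helper: lists the zones in which a NEG with the given name exists.
neg_zones() {
  gcloud compute network-endpoint-groups list \
    --filter="name=$1" --format='value(zone.basename())'
}

# Usage:
#   neg_status
#   neg_zones ingress-nginx-80-neg
```

An empty result from either helper usually means the NEG has not been created yet, so it is worth waiting a little and retrying before moving on.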

Create a very simple web application deployment (also nginx, but not the ingress controller).

cat << EOF > dummy-app.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dummy-deployment
spec:
  selector:
    matchLabels:
      app: dummy
  replicas: 2
  template:
    metadata:
      labels:
        app: dummy
    spec:
      containers:
      - name: dummy
        image: nginx:latest
        ports:
        - name: http
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: dummy-service
spec:
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: dummy
EOF

# apply the configuration

kubectl apply -f dummy-app.yaml

The next step is to configure a Kubernetes ingress that uses our ingress-nginx controller.

cat << EOF > dummy-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: dummy-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: ""
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: dummy-service
            port:
              number: 80
EOF

# apply the configuration

kubectl apply -f dummy-ingress.yaml

The kubernetes.io/ingress.class annotation on the ingress object specifies the type of the ingress: nginx or gce. On GKE the default is gce.

The next steps are taken from my other tutorial and expose ingress-nginx through a custom external load balancer.

Find the network tags of the cluster.

NETWORK_TAGS=$(gcloud compute instances describe \
  $(kubectl get nodes -o jsonpath='{.items[0].metadata.name}') \
  --zone=$(kubectl get nodes -o jsonpath="{.items[0].metadata.labels['topology\.gke\.io/zone']}") \
  --format="value(tags.items[0])")

Configure the VPC firewall.

gcloud compute firewall-rules create $CLUSTER_NAME-lb-fw \
  --allow tcp:80 \
  --source-ranges 130.211.0.0/22,35.191.0.0/16 \
  --target-tags $NETWORK_TAGS

Create the health check configuration.

gcloud compute health-checks create http app-service-80-health-check \
  --request-path /healthz \
  --port 80 \
  --check-interval 60 \
  --unhealthy-threshold 3 \
  --healthy-threshold 1 \
  --timeout 5

Create the backend service.

gcloud compute backend-services create $CLUSTER_NAME-lb-backend \
  --health-checks app-service-80-health-check \
  --port-name http \
  --global \
  --connection-draining-timeout 300

Logging is not enabled by default.

gcloud compute backend-services update $CLUSTER_NAME-lb-backend \
  --enable-logging \
  --global

Add the NEG to the backend service.

gcloud compute backend-services add-backend $CLUSTER_NAME-lb-backend \
  --network-endpoint-group=ingress-nginx-80-neg \
  --network-endpoint-group-zone=$ZONE \
  --balancing-mode=RATE \
  --capacity-scaler=1.0 \
  --max-rate-per-endpoint=1.0 \
  --global
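Before wiring up the frontend, it can be useful to check whether the load balancer already sees the ingress-nginx endpoints as healthy, since that confirms both the firewall rule and the /healthz health check. The helper below is a sketch with a made-up name. It assumes the default YAML output of get-health, where each endpoint appears with a healthState field.

```shell
# Hypothetical helper: counts the endpoints a global backend service reports
# as HEALTHY. Relies on "healthState: HEALTHY" lines in the default output.
count_healthy_endpoints() {
  gcloud compute backend-services get-health "$1" --global \
    | grep -c 'healthState: HEALTHY'
}

# Usage: count_healthy_endpoints "$CLUSTER_NAME-lb-backend"
```

With the 60 second check interval used above, it may take a minute or two before the count goes above zero.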

Set up the frontend and the load balancer.

gcloud compute url-maps create $CLUSTER_NAME-url-map \
  --default-service $CLUSTER_NAME-lb-backend

gcloud compute target-http-proxies create $CLUSTER_NAME-http-proxy \
  --url-map $CLUSTER_NAME-url-map

gcloud compute forwarding-rules create $CLUSTER_NAME-forwarding-rule \
  --global \
  --ports 80 \
  --target-http-proxy $CLUSTER_NAME-http-proxy

Test the setup

IP_ADDRESS=$(gcloud compute forwarding-rules describe $CLUSTER_NAME-forwarding-rule --global --format="value(IPAddress)")

echo $IP_ADDRESS

curl -s -I http://$IP_ADDRESS/
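A freshly created global load balancer can take a few minutes before it starts serving, so the first curl may fail or return an error status. A small polling loop like the one below can save some manual retries. The function name and the default retry values are assumptions made for this sketch.

```shell
# Hypothetical helper: polls a URL until it returns the expected HTTP status.
# Arguments: url [expected_status] [max_attempts] [delay_seconds]
wait_for_http() {
  local url="$1"
  local expected="${2:-200}"
  local attempts="${3:-30}"
  local delay="${4:-10}"
  local i=1
  local code=""
  while [ "$i" -le "$attempts" ]; do
    # -w '%{http_code}' prints only the status code; the body is discarded.
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" = "$expected" ]; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "gave up after $attempts attempts (last status: $code)" >&2
  return 1
}

# Usage: wait_for_http "http://$IP_ADDRESS/"
```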

Additional info

How to get the client IP address

When ingress-nginx is deployed using the ClusterIP option, the X-Forwarded-For header that reaches your application contains the address of the GCP load balancer and not the address of the actual client. The load balancer itself sets the header correctly with the IP of the client. Luckily, ingress-nginx saves the original X-Forwarded-For header into the X-Original-Forwarded-For header, which can be accessed from the final web service.
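As an illustration, here is one way the final web service (or a debugging script) could pull the client IP out of the X-Original-Forwarded-For value. It is a sketch with a hypothetical function name. It assumes the Google load balancer appends the client IP and its own IP as the last two entries, so the client is the second-to-last entry even when the request already carried an X-Forwarded-For header. The addresses in the usage lines are documentation placeholders.

```shell
# Hypothetical helper: extracts the client IP from an X-Original-Forwarded-For
# value. Assumes the last two entries are "<client-ip>, <load-balancer-ip>".
client_ip_from_xff() {
  printf '%s\n' "$1" | awk -F',' '
    NF >= 2 { ip = $(NF - 1) }   # second-to-last entry is the client
    NF < 2  { ip = $1 }          # single entry: return it as-is
    { gsub(/^[ \t]+|[ \t]+$/, "", ip); print ip }'
}

# Usage:
#   client_ip_from_xff "203.0.113.7, 198.51.100.10"
#   client_ip_from_xff "10.0.0.1, 203.0.113.7, 198.51.100.10"
# Both calls print 203.0.113.7
```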

Thanks to Robin Pecha https://github.com/robinpecha for sharing this with me.

How to use this setup in a multi-zonal cluster

NEGs (network endpoint groups) are zonal resources. To use this setup with a regional cluster, you need one NEG per zone, and every NEG must be added to the backend service. The GCP load balancer will choose the closest NEG.

REGION=us-central1

gcloud container clusters create $CLUSTER_NAME \
  --region $REGION \
  --enable-ip-alias \
  --num-nodes=1

Configure the ingress-nginx Helm deployment.

cat << EOF > values.yaml
controller:
  service:
    type: ClusterIP
    annotations:
      cloud.google.com/neg: '{"exposed_ports": {"80":{"name": "ingress-nginx-80-neg"}}}'
  replicaCount: 3
EOF

Note: You can play around with the pod affinity option to make sure you have one controller in each zone. The Stack Overflow question "Multizone Kubernetes cluster and affinity" is a good starting point.

After deployment you will have 3 NEGs, one for each zone.

Add the NEGs to the backend service.

ZONE2=us-central1-f

ZONE3=us-central1-c

gcloud compute backend-services add-backend $CLUSTER_NAME-lb-backend \
  --network-endpoint-group=ingress-nginx-80-neg \
  --network-endpoint-group-zone=$ZONE2 \
  --balancing-mode=RATE \
  --capacity-scaler=1.0 \
  --max-rate-per-endpoint=1.0 \
  --global

# repeat the command above with --network-endpoint-group-zone=$ZONE3
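Because the same add-backend call has to be repeated for every zone, a small loop can cut down on copy and paste. The function below is a sketch with a hypothetical name. It reuses the flags from the command above and stops at the first zone that fails.

```shell
# Hypothetical helper: attaches the same-named NEG from each given zone to a
# global backend service. Arguments: backend_service neg_name zone...
add_neg_backends() {
  local backend="$1"
  local neg="$2"
  local zone
  shift 2
  for zone in "$@"; do
    gcloud compute backend-services add-backend "$backend" \
      --network-endpoint-group="$neg" \
      --network-endpoint-group-zone="$zone" \
      --balancing-mode=RATE \
      --capacity-scaler=1.0 \
      --max-rate-per-endpoint=1.0 \
      --global || return 1
  done
}

# Usage:
#   add_neg_backends "$CLUSTER_NAME-lb-backend" ingress-nginx-80-neg "$ZONE2" "$ZONE3"
```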

Cleaning up

In order to remove all the resources created and avoid unnecessary charges, run the following commands:

# delete the forwarding-rule aka frontend

gcloud -q compute forwarding-rules delete $CLUSTER_NAME-forwarding-rule --global

# delete the http proxy

gcloud -q compute target-http-proxies delete $CLUSTER_NAME-http-proxy

# delete the url map

gcloud -q compute url-maps delete $CLUSTER_NAME-url-map

# delete the backend

gcloud -q compute backend-services delete $CLUSTER_NAME-lb-backend --global

# delete the health check

gcloud -q compute health-checks delete app-service-80-health-check

# delete the firewall rule

gcloud -q compute firewall-rules delete $CLUSTER_NAME-lb-fw

# delete the cluster

gcloud -q container clusters delete $CLUSTER_NAME --zone=$ZONE

# delete the NEG

gcloud -q compute network-endpoint-groups delete ingress-nginx-80-neg --zone=$ZONE

Conclusion

It is amazing that all these components are interchangeable and can be configured to work together. You can use the right tool for the right job.