Theia - Build your own IDE

The kubernetes/ingress-gce project is a special one because you cannot run or test your changes on your local machine. If you are not on GCE and the metadata server is not accessible, the glbc process exits with an error. So your only option is to write your changes locally, build, push the image to the registry and redeploy the controller. The whole process takes about 5 minutes, and if you forgot to put a klog call in the important places, you have to build, push and deploy again, and again. Boring …

Unless there is a way to build and run on the spot, in the cluster.

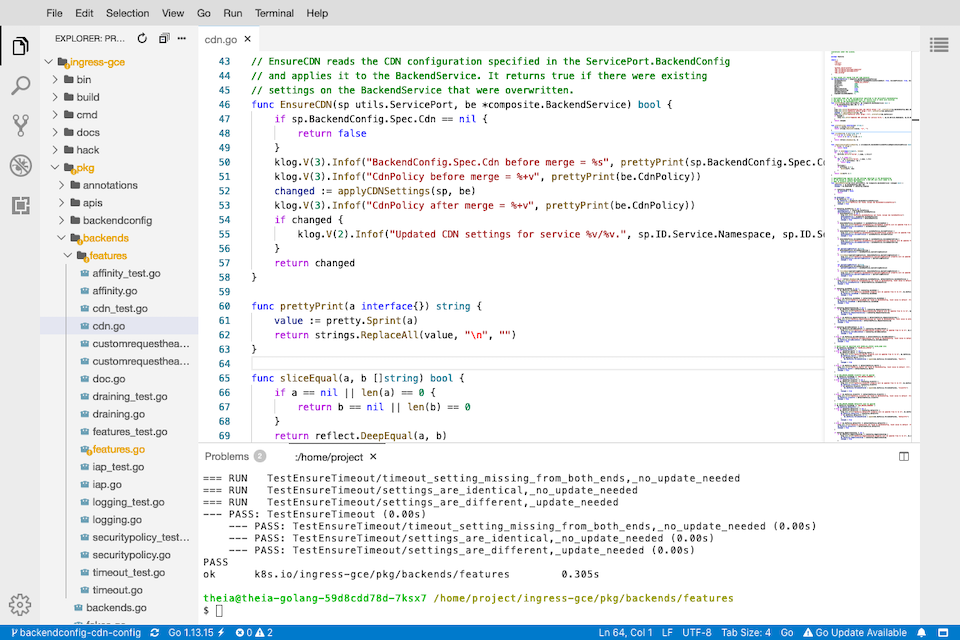

A while ago I found Theia, and I have been using it for all of my projects as a replacement for Visual Studio Code. It works very well.

In this tutorial I will show you how to deploy kubernetes/ingress-gce (aka GLBC or l7-controller) together with Theia, and then update, build, run and debug your code directly on a live cluster.

This speeds up development significantly: instead of a build, push and redeploy cycle for every change, you rebuild and rerun the binary right where it runs.

TL;DR

If you have already done this and know exactly what the script does, you can download it

curl https://gist.githubusercontent.com/gabihodoroaga/eb0f9fc0d2681f20239fef22d7479f2e/raw/0f8a1de5d5e76a79d64b50684269dafd9ae7935a/deploy-glbc-theia.sh \
  --output deploy-glbc-theia.sh

# edit the file to set the variables

and then run it:

chmod +x deploy-glbc-theia.sh

./deploy-glbc-theia.sh

Prerequisites

- GCP project with billing enabled. If you don’t have one, sign in to the Google Cloud Platform Console and create a new project

- Access to a standard internet browser

- The gcloud CLI and kubectl on the machine you run the commands from

Setup

An overview of the solution:

- download the required files from github.com

- set up a cluster

- disable the default GLBC add-on

- deploy theia-golang to your cluster

- clone, update, build and run GLBC from Theia

First let’s define some variables:

ZONE=us-central1-a

CLUSTER_NAME=demo-gce-cluster

PROJECT_ID=`gcloud config list --format 'value(core.project)' 2>/dev/null`

REGISTRY="gcr.io/$PROJECT_ID"

GCP_USER=`gcloud config list --format 'value(core.account)' 2>/dev/null`
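If gcloud has no default project or account configured, PROJECT_ID or GCP_USER silently ends up empty, and the later commands fail in confusing ways. A minimal POSIX sh sketch of a fail-fast check; `require_vars` is a hypothetical helper, not part of the Gist or of gcloud:

```shell
# Hypothetical helper (not part of the Gist): fail if any named variable is empty.
# Usage: require_vars ZONE CLUSTER_NAME PROJECT_ID REGISTRY GCP_USER
require_vars() {
  _rv_missing=""
  for _rv_name in "$@"; do
    # Reject anything that is not a valid shell identifier before using eval.
    case "$_rv_name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "require_vars: invalid variable name '$_rv_name'" >&2
        return 2
        ;;
    esac
    # Read the value of the variable whose name is in _rv_name.
    eval "_rv_value=\${$_rv_name:-}"
    if [ -z "$_rv_value" ]; then
      _rv_missing="$_rv_missing $_rv_name"
    fi
  done
  if [ -n "$_rv_missing" ]; then
    echo "require_vars: empty or unset:$_rv_missing" >&2
    return 1
  fi
  return 0
}
```

In a script you would call `require_vars ZONE CLUSTER_NAME PROJECT_ID REGISTRY GCP_USER || exit 1` right after the assignments; in an interactive terminal, just check the message instead of exiting.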

Download the files required to set up your cluster from this Gist:

curl https://codeload.github.com/gist/a5644451e1d309ad40b1312eb2369fe6/zip/70d0ec9fd78834ba4662ac7ee1ca46584d3d026c \
  --output deploy-ingress-gce.zip

unzip deploy-ingress-gce.zip

mv a5644451e1d309ad40b1312eb2369fe6-70d0ec9fd78834ba4662ac7ee1ca46584d3d026c \
  deploy-ingress-gce

cd deploy-ingress-gce

Next, let’s create a cluster,

gcloud container clusters \
  create $CLUSTER_NAME \
  --zone $ZONE --machine-type "n2-standard-2" \
  --enable-ip-alias \
  --num-nodes=1

and get the credentials so that kubectl on your local machine can connect to the cluster,

gcloud container clusters get-credentials $CLUSTER_NAME \
  --zone $ZONE

grant the current GCP user permission to create new k8s ClusterRoles,

kubectl create clusterrolebinding one-binding-to-rule-them-all \
  --clusterrole=cluster-admin --user=${GCP_USER}

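and store the nodePort of the existing default-http-backend service, because that service is deleted when the add-on is disabled and is recreated later with the same port:

NODE_PORT=`kubectl get svc default-http-backend -n kube-system -o yaml \
  | grep "nodePort:" | cut -f2- -d:`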

echo $NODE_PORT
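NODE_PORT is substituted into default-http-backend.yaml later on, so it is worth checking it now, while the original service still exists; once the add-on is disabled the value can no longer be read. A minimal sketch, assuming the default Kubernetes NodePort range of 30000-32767; `is_valid_node_port` is a hypothetical helper:

```shell
# Hypothetical helper: succeed only if $1 is a port inside the default
# Kubernetes NodePort range (30000-32767). A cluster started with a custom
# --service-node-port-range would need different bounds.
is_valid_node_port() {
  # The grep/cut pipeline keeps the space after "nodePort:", so strip blanks first.
  _port=$(printf '%s' "$1" | tr -d ' \t')
  case "$_port" in
    ''|*[!0-9]*) return 1 ;;   # empty, or contains something other than digits
  esac
  [ "$_port" -ge 30000 ] && [ "$_port" -le 32767 ]
}

# Example:
#   is_valid_node_port "$NODE_PORT" || echo "NODE_PORT looks wrong: '$NODE_PORT'" >&2
```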

Now disable the default GLBC in this cluster, so that it does not compete with the GLBC we are going to run ourselves:

gcloud container clusters update ${CLUSTER_NAME} --zone=${ZONE} \
  --update-addons=HttpLoadBalancing=DISABLED

This takes a while because the master node has to be restarted.

Make sure that the default backend pod and service no longer exist:

kubectl get svc -n kube-system | grep default-http-backend

kubectl get pod -n kube-system | grep default-backend

You should not get anything back from the above commands.
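The commands above may keep returning results for a while after the update finishes. Rather than rerunning them by hand, a small polling loop can wait for the service to disappear. A minimal sketch; `wait_for_no_output` is a hypothetical helper, and the example relies on kubectl's `--ignore-not-found` flag, which makes `kubectl get` print nothing and exit 0 when the object does not exist:

```shell
# Hypothetical helper: rerun a command until it succeeds AND prints nothing.
# A failing command (e.g. the API server is unreachable while the master
# restarts) counts as "not done yet", so a connection error is not
# mistaken for success.
# Usage: wait_for_no_output MAX_ATTEMPTS SLEEP_SECONDS command [args...]
wait_for_no_output() {
  _wf_max=$1
  _wf_delay=$2
  shift 2
  _wf_attempt=1
  while [ "$_wf_attempt" -le "$_wf_max" ]; do
    if _wf_out=$("$@" 2>/dev/null) && [ -z "$_wf_out" ]; then
      return 0
    fi
    echo "attempt $_wf_attempt/$_wf_max: not gone yet" >&2
    _wf_attempt=$((_wf_attempt + 1))
    sleep "$_wf_delay"
  done
  return 1
}

# Example (up to 30 attempts, 10 seconds apart):
#   wait_for_no_output 30 10 \
#     kubectl get svc default-http-backend -n kube-system --ignore-not-found
```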

Create a Kubernetes service account for GLBC and give it a ClusterRole that allows it to access the API objects it needs:

kubectl apply -f rbac.yaml

Generate default-http-backend.yaml from the template, reusing the saved nodePort:

sed "s/\[NODE_PORT\]/$NODE_PORT/" default-http-backend.yaml.tpl \
  > default-http-backend.yaml

Recreate the default backend:

kubectl create -f default-http-backend.yaml

Create a new GCP service account:

gcloud iam service-accounts create glbc-service-account \
  --display-name "Service Account for GLBC"

Grant the GCP service account the Compute Admin role:

gcloud projects add-iam-policy-binding ${PROJECT_ID} \
  --member serviceAccount:glbc-service-account@${PROJECT_ID}.iam.gserviceaccount.com \
  --role roles/compute.admin

Create a key for the GCP service account:

gcloud iam service-accounts keys create key.json \
  --iam-account glbc-service-account@${PROJECT_ID}.iam.gserviceaccount.com

Store the service account key as a Kubernetes secret:

kubectl create secret generic glbc-gcp-key \
  --from-file=key.json

Now everything is set up to deploy Theia:

kubectl apply -f theia.yaml

The Docker image used comes from the gabihodoroaga/theia-apps repository.

Next, open the firewall so that you can reach Theia from your browser:

THEIA_NODE_PORT=$(kubectl get service theia-golang-service \
  -o=jsonpath='{.spec.ports[?(@.name=="ide")].nodePort}')

NODE_ADDRESS=$(kubectl get nodes \
  -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}')

GKE_NETWORK_TAG=$(gcloud compute instances describe \
  $(kubectl get nodes -o jsonpath='{.items[0].metadata.name}') \
  --zone=$ZONE --format="value(tags.items[0])")

# this must be the public IP of the machine running your browser, not the Cloud Shell address
SHELL_IP_ADDRESS=$(curl -s http://ifconfig.me)
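If ifconfig.me is unreachable or your connection prefers IPv6, SHELL_IP_ADDRESS can end up empty, holding an error page, or holding an IPv6 address, and the firewall rule then will not match your browser's traffic to the node's IPv4 address. A minimal sketch of a sanity check before creating the rule; `is_ipv4` is a hypothetical helper, and `curl -4` (force IPv4) is a standard curl option:

```shell
# Hypothetical helper: succeed only for a dotted-quad IPv4 address whose
# four octets are all in 0-255. Anything else (empty output, an error page,
# an IPv6 address) is rejected.
is_ipv4() {
  case "$1" in
    ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
  esac
  _ip_old_ifs=$IFS
  IFS=.
  set -- $1          # split on dots; only digits and dots remain, so no globbing
  IFS=$_ip_old_ifs
  [ "$#" -eq 4 ] || return 1
  for _ip_octet in "$@"; do
    [ "$_ip_octet" -le 255 ] || return 1
  done
  return 0
}

# Example:
#   SHELL_IP_ADDRESS=$(curl -s -4 http://ifconfig.me)
#   is_ipv4 "$SHELL_IP_ADDRESS" || echo "unexpected address: '$SHELL_IP_ADDRESS'" >&2
```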

gcloud compute firewall-rules create allow-theia-golang \
  --direction=INGRESS --priority=1000 --network=default \
  --action=ALLOW --rules=tcp:$THEIA_NODE_PORT \
  --source-ranges=$SHELL_IP_ADDRESS \
  --target-tags=$GKE_NETWORK_TAG

Print the URL of your Theia instance

echo http://$NODE_ADDRESS:$THEIA_NODE_PORT

and open a browser at this address.

In Theia, open a terminal window from the menu “Terminal -> New Terminal” (or press Ctrl+` (backtick)) and run

git clone https://github.com/kubernetes/ingress-gce.git

cd ingress-gce

To build GLBC, run

env CGO_ENABLED=0 go build -a \
  -o bin/amd64/glbc k8s.io/ingress-gce/cmd/glbc

To run GLBC you need a configuration file, gce.conf. Go back to the terminal on your local machine, where the variables defined above are set, and run the following commands:

NETWORK_NAME=$(basename $(gcloud container clusters \
  describe $CLUSTER_NAME --project $PROJECT_ID --zone=$ZONE \
  --format='value(networkConfig.network)'))

SUBNETWORK_NAME=$(basename $(gcloud container clusters \
  describe $CLUSTER_NAME --project $PROJECT_ID \
  --zone=$ZONE --format='value(networkConfig.subnetwork)'))

NETWORK_TAGS=$(gcloud compute instances describe \
  $(kubectl get nodes -o jsonpath='{.items[0].metadata.name}') \
  --zone=$ZONE --format="value(tags.items[0])")

sed "s/\[PROJECT\]/$PROJECT_ID/" gce.conf.tpl | \
  sed "s/\[NETWORK\]/$NETWORK_NAME/" | \
  sed "s/\[SUBNETWORK\]/$SUBNETWORK_NAME/" | \
  sed "s/\[CLUSTER_NAME\]/$CLUSTER_NAME/" | \
  sed "s/\[NETWORK_TAGS\]/$NETWORK_TAGS/" | \
  sed "s/\[ZONE\]/$ZONE/" > gce.conf
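The chain of sed calls above works, but a value containing `/` or `&` would silently corrupt gce.conf, and a misspelled placeholder would simply be left in the file. A minimal sketch of the same substitution in a single sed pass that escapes the values and refuses to leave any uppercase `[PLACEHOLDER]` behind; `render_template` is a hypothetical helper, and the check assumes the template's placeholders are uppercase, as the ones above are:

```shell
# Hypothetical helper (not part of the Gist): fill [KEY] placeholders in a
# template in a single sed pass, then refuse to leave placeholders behind.
# Usage: render_template TEMPLATE OUTPUT KEY=VALUE...
render_template() {
  _rt_tpl=$1
  _rt_dst=$2
  shift 2
  _rt_nl='
'
  _rt_script=""
  for _rt_pair in "$@"; do
    _rt_key=${_rt_pair%%=*}
    _rt_val=${_rt_pair#*=}
    # Escape the characters that are special in a sed replacement:
    # backslash, & and the | delimiter used below.
    _rt_val=$(printf '%s\n' "$_rt_val" | sed 's/[\\&|]/\\&/g')
    # One s command per line keeps the sed script portable.
    _rt_script="${_rt_script}s|\\[${_rt_key}]|${_rt_val}|g${_rt_nl}"
  done
  sed "$_rt_script" "$_rt_tpl" > "$_rt_dst" || return 1
  # Uppercase-only match, so an INI section header such as [global] passes.
  if grep -n '\[[[:upper:]_][[:upper:]_]*]' "$_rt_dst" >&2; then
    echo "render_template: unreplaced placeholders in $_rt_dst" >&2
    return 1
  fi
  return 0
}

# Example (local terminal, in the deploy-ingress-gce directory):
#   render_template gce.conf.tpl gce.conf PROJECT="$PROJECT_ID" \
#     NETWORK="$NETWORK_NAME" SUBNETWORK="$SUBNETWORK_NAME" \
#     CLUSTER_NAME="$CLUSTER_NAME" NETWORK_TAGS="$NETWORK_TAGS" ZONE="$ZONE"
```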

Then copy the file into the Theia pod:

kubectl cp gce.conf \
  $(kubectl get pods --selector=app=theia-golang \
  --output=jsonpath='{.items..metadata.name}'):/home/project/gce.conf
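The jsonpath `{.items..metadata.name}` prints the name of every pod that matches the selector, separated by spaces. Normally there is exactly one Theia pod, but while an old pod is still terminating there may briefly be two (or none yet), and `kubectl cp` then fails with an unhelpful error. A minimal sketch of a guard; `single_pod_name` is a hypothetical helper:

```shell
# Hypothetical helper: print the only pod name in $1, or fail if there are
# zero or several (e.g. while an old pod is still terminating).
single_pod_name() {
  # Word-split the space-separated jsonpath output into positional
  # parameters; pod names contain no glob characters.
  set -- $1
  if [ "$#" -ne 1 ]; then
    echo "single_pod_name: expected exactly one pod, got $#: $*" >&2
    return 1
  fi
  printf '%s\n' "$1"
}

# Example (local terminal):
#   POD=$(single_pod_name "$(kubectl get pods --selector=app=theia-golang \
#     --output=jsonpath='{.items..metadata.name}')") &&
#   kubectl cp gce.conf "$POD":/home/project/gce.conf
```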

Finally, back in the Theia terminal (in the ingress-gce directory), run GLBC:

./bin/amd64/glbc \
  --v=3 \
  --running-in-cluster=true \
  --logtostderr \
  --config-file-path=../gce.conf \
  --healthz-port=8086 \
  --sync-period=300s \
  --gce-ratelimit=ga.Operations.Get,qps,10,100 \
  --gce-ratelimit=alpha.Operations.Get,qps,10,100 \
  --gce-ratelimit=ga.BackendServices.Get,qps,1.8,1 \
  --gce-ratelimit=ga.HealthChecks.Get,qps,1.8,1 \
  --gce-ratelimit=alpha.HealthChecks.Get,qps,1.8,1 \
  --enable-backendconfig-healthcheck \
  --enable-frontend-config \
  2>&1 | tee -a ../glbc.log

Press Ctrl+C to stop it :)
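With `--v=3` the log gets noisy quickly. klog starts every line with a severity letter (I, W, E or F) followed by the date as MMDD, so errors can be pulled out of the file the `tee` above appends to. A minimal sketch; `klog_errors` is a hypothetical helper:

```shell
# Hypothetical helper: show only ERROR (E) and FATAL (F) entries from a klog
# log. klog starts every line with a severity letter and the date as MMDD:
#   E0315 10:04:05.123456      12 controller.go:42] message
# Defaults to ../glbc.log, the file the tee above appends to.
klog_errors() {
  grep -E '^[EF][0-9]{4} ' "${1:-../glbc.log}"
}
```

Run `klog_errors` from a second terminal in the ingress-gce directory while GLBC is running; grep exits with status 1 when there are no errors yet.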

If you want to create a test deployment and ingress, go back to your local terminal and run:

kubectl apply -f app-deployment.yaml

kubectl apply -f app-ingress.yaml

You can even run the e2e tests from a separate terminal window in Theia:

# build

env CGO_ENABLED=0 go test -c \
  -o bin/amd64/e2e-test k8s.io/ingress-gce/cmd/e2e-test

# update variables first

PROJECT=[project-222111]

REGION=us-central1

NETWORK=default

bin/amd64/e2e-test -test.v \
  -test.parallel=100 \
  -run \
  -project ${PROJECT} \
  -region ${REGION} \
  -network ${NETWORK} \
  -destroySandboxes=false \
  -inCluster \
  -logtostderr -v=2 \
  -test.run=TestCDNCacheMode \
  2>&1 | tee -a ../e2e-test.log

And be prepared to wait: it takes a really long time to finish.
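Because the run is long and verbose, it helps to summarise the log afterwards. With `-test.v`, Go test binaries print one result line per test, such as `--- PASS: TestName (1.23s)`, with subtests indented. A minimal sketch that counts those lines in the file the `tee` above appends to; `summarize_test_log` is a hypothetical helper:

```shell
# Hypothetical helper: summarise `go test -v` style results from a log file.
# Result lines look like "--- PASS: TestName (1.23s)"; subtests are indented.
# Defaults to ../e2e-test.log, the file the tee above appends to.
summarize_test_log() {
  _st_log=${1:-../e2e-test.log}
  [ -r "$_st_log" ] || { echo "cannot read $_st_log" >&2; return 2; }
  # grep -c prints 0 (and exits 1) when nothing matches; || true keeps going.
  _st_pass=$(grep -c -E '^[[:space:]]*--- PASS: ' "$_st_log" || true)
  _st_fail=$(grep -c -E '^[[:space:]]*--- FAIL: ' "$_st_log" || true)
  _st_skip=$(grep -c -E '^[[:space:]]*--- SKIP: ' "$_st_log" || true)
  echo "pass=$_st_pass fail=$_st_fail skip=$_st_skip"
  # List the failing tests, if any.
  grep -E '^[[:space:]]*--- FAIL: ' "$_st_log" || true
  # Exit status: 0 only when nothing failed.
  [ "$_st_fail" -eq 0 ]
}
```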

Note: almost everything I used in this tutorial comes from the project's own source code and scripts; I just put the pieces together.

Cleaning up

Warning: make sure to push or save your changes before deleting the cluster; the code you edited in Theia lives in the cluster, not on your machine.

# delete the cluster

gcloud container clusters delete $CLUSTER_NAME --zone $ZONE

# delete the service account

gcloud -q iam service-accounts delete \

glbc-service-account@${PROJECT_ID}.iam.gserviceaccount.com

# delete firewall rule

gcloud -q compute firewall-rules delete allow-theia-golang

# delete the compute disk

# MANUAL ACTION

Why

If anyone asks why I am spending time on the kubernetes/ingress-gce project, the answer is that the best way to learn is to try to solve a real problem and, after you solve it, let others validate your work. I learned a lot more about Kubernetes, ingress controllers, GCP, CDN and GCE than I would have by taking a course or just studying the documentation.

Conclusion

Theia is amazing, and it lives up to its claim of being “An Open, Flexible and Extensible Cloud & Desktop IDE Platform”. Try it and you will love it.